Deep learning is a technique used to solve complex problems such as natural language processing and image recognition. We are now able to solve these computational problems quickly, thanks to a component called the Graphics Processing Unit (GPU). Originally used to generate high-resolution computer images at fast speeds, the GPU’s computational efficiency makes it ideal for executing deep learning algorithms. Analysis which used to take weeks can now be completed in a few days.

While all modern computers have a GPU, not all GPUs can be programmed for deep learning. For those who do not have a deep learning-enabled GPU, this post provides a step-by-step layman’s tutorial on building your own deep learning box. Our deep learning box is essentially another computer equipped with a deep learning-enabled GPU. The GPU is the main difference between a regular computer and a deep learning box.

First, check if your GPU is listed on this site. If it is, you already have a GPU capable of deep learning, and you can start from the second section below on software installation. If your computer does not have a suitable GPU, read on to find out how you can get the required components for less than $1.5k.
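If you are on a Linux machine and are not sure which GPU you have, one quick way to find out is to list the PCI devices and look for an Nvidia graphics entry. The sketch below assumes the `lspci` tool is installed; the function name `list_nvidia_gpus` is our own, made up for illustration. Finding an Nvidia card this way does not by itself mean it supports deep learning, so you would still need to look up the model name on the list mentioned above.

```shell
#!/bin/sh
# Print only the lines of `lspci` output that describe an NVIDIA graphics device.
# Reads from standard input, so it can also be tried on saved output.
list_nvidia_gpus() {
    grep -i 'nvidia' | grep -iE 'vga|3d|display'
}

# Run against this machine only if lspci is available.
if command -v lspci >/dev/null 2>&1; then
    lspci | list_nvidia_gpus || echo "No NVIDIA graphics device found"
fi
```

The second filter drops non-graphics entries, such as the audio controller that many Nvidia cards also expose.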

Purchase Hardware

This section lists the main components of your deep learning box. If you have no computing knowledge or you want to save time, you may choose to purchase these in-store and pay a nominal fee for assistance to assemble the components. Prices listed below are in USD as of March 2016. The total cost was $1285, which we funded using prize money from competitions.

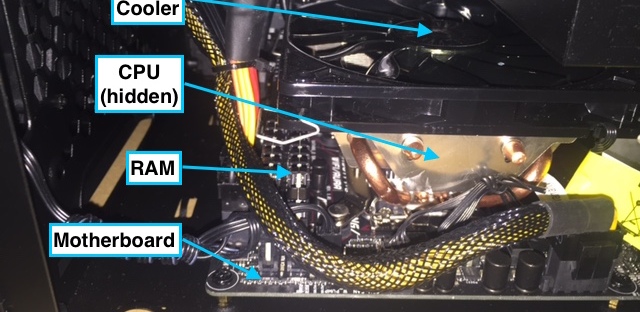

Motherboard

Distributes power to the rest of the components and enables communication between them. It is a printed circuit board.

Central Processing Unit (CPU)

Performs general computational operations, like a brain. It is a chip on the motherboard.

$410 (bundle) – Asus B150i Pro Gaming ITX (Motherboard) + Intel i5 6600k (CPU)

Random-Access Memory (RAM)

Enables quick retrieval of information, like working memory. It is another fixture on the motherboard.

$115 – 16GB (2x8GB), Corsair Vengeance DDR4 DRAM 2400MHz

Graphics Processing Unit (GPU)

Performs intensive computations for deep learning. Our chosen GPU, the GTX 970, has a small form factor so that we could fit it into a portable case. An older GPU, the GTX 770, was able to process 5120 images over 20 training iterations within 33 seconds. Hence, our machine is expected to perform even faster. Nonetheless, you can also get a GTX 1070 or GTX 1080 now as they’ve become more affordable.

$415 – 4GB, Gigabyte GTX970 ITX GDDR5

Power Supply Unit (PSU)

Converts electricity from the mains to power the machine.

$75 – 550W, Cooler Master G550M

Hard Disk Drive (HDD)

Enables lasting storage of information, like long-term memory.

$160 – 4TB, Western Digital Blue 5400 RPM

HDD affixed to the internal ceiling plate of the case.

CPU Cooler

Fans the CPU to prevent it from overheating.

$35 – Cooler Master GeminII M4

Case

Protects and holds all the components. Make sure that it has a USB port.

$50 – Cooler Master Elite 110

Assembly

$25 – in-store service to secure and wire the components.

After the box has been assembled, connect it to a standalone monitor, keyboard and mouse.
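As a quick sanity check on the budget, the prices listed in this section can be added up in the terminal. This is only a sketch: the variable names are our own, and the $1500 budget is taken from the "less than $1.5k" target mentioned earlier.

```shell
#!/bin/sh
# Component prices in USD, copied from the list above (March 2016).
motherboard_cpu=410   # Asus B150i Pro Gaming ITX + Intel i5 6600k bundle
ram=115
gpu=415
psu=75
hdd=160
cooler=35
case_price=50
assembly=25

total=$((motherboard_cpu + ram + gpu + psu + hdd + cooler + case_price + assembly))
budget=1500           # assumed from the "less than $1.5k" target

echo "Total: \$$total"
if [ "$total" -le "$budget" ]; then
    echo "Within budget by \$$((budget - total))"
else
    echo "Over budget by \$$((total - budget))"
fi
```

If you swap in a different GPU or drive, changing the matching line shows straight away whether the build still fits the budget.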

Install Software

Step 1: Install Ubuntu

Ubuntu is the operating system (OS) on which your deep learning box will run, much like Windows or Mac. A straightforward way to install the OS would be to use another computer to download the OS file onto a USB stick. Then, plug the USB stick into your deep learning box and install the OS from there.

Follow the links for instructions on how to download the OS file onto the USB stick from a Windows PC or a Mac. Once you boot Ubuntu on your deep learning box from the USB stick for the first time, you’ll be guided through the installation process.
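Before copying the OS file onto the USB stick, it can be worth checking that the download is intact. This step is our own suggestion rather than part of the linked instructions. The sketch below assumes you are on a Linux machine with `sha256sum` available; the function name `verify_iso` and the file name in the usage comment are placeholders.

```shell
#!/bin/sh
# verify_iso FILE EXPECTED_SHA256
# Succeeds only if the SHA-256 digest of FILE matches the expected value.
verify_iso() {
    actual=$(sha256sum "$1" | cut -d ' ' -f 1)
    [ "$actual" = "$2" ]
}

# Hypothetical usage; replace the file name and digest with your own:
# verify_iso ubuntu.iso "<digest published alongside the download>" \
#     && echo "Download looks intact"
```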

Step 2: Install the deep learning stack

Nvidia is one of the companies that produce GPUs, and it has developed a deep learning framework called Digits. Before we can get Digits running, we have to install a few pieces of software lower in the stack.

We need to install a driver, which acts as an access key for the deep learning software to harness the GPU for its computations. To install it, press Ctrl+Alt+T to open the Terminal window.

As of Ubuntu 14.04, the Nvidia drivers are part of the official repository. Although the versions maintained in the repository might not be the newest compared to those on the official Nvidia website, they are usually more stable and have been tested by the community against different software. We recommend using the drivers from the Ubuntu repository.

To search for the latest driver to install:

apt-cache search nvidia

You should be able to find a package that looks like “nvidia-xxx”, where xxx is the version number of the driver. Choose the latest one. In this example, we use nvidia-352.
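The search usually returns a long list, so picking out the latest driver by eye can be tedious. As a rough sketch, the filter below reads the search output and prints the highest-numbered “nvidia-xxx” package name. The function name `latest_nvidia_driver` is our own, and it assumes the driver packages follow the “nvidia-xxx” naming pattern described above.

```shell
#!/bin/sh
# Read `apt-cache search nvidia` output on standard input and print the
# highest-numbered "nvidia-xxx" driver package name.
latest_nvidia_driver() {
    # Keep only the leading "nvidia-<number>" part of each line, drop
    # duplicates, sort numerically on the number, and take the largest.
    grep -oE '^nvidia-[0-9]+' | sort -t- -k2,2n -u | tail -n 1
}

# Usage on the box:
#   apt-cache search nvidia | latest_nvidia_driver
```

The highest number is not always the right choice for older cards, so treat the result as a starting point rather than a final answer.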

Then, type in the following commands:

sudo apt-get install nvidia-352 nvidia-352-uvm

sudo apt-get install nvidia-modprobe nvidia-settings

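The additional packages “nvidia-modprobe” and “nvidia-settings” help manage the driver: nvidia-modprobe loads the Nvidia kernel module and creates its device files, while nvidia-settings provides a tool for configuring the driver.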
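Once the driver packages are installed (a reboot may be needed), one way to confirm that the driver can see your GPU is the `nvidia-smi` tool that ships with the driver. The sketch below wraps the check in a small helper so that it reports cleanly when the tool is missing; the helper name `report_tool` is our own.

```shell
#!/bin/sh
# report_tool NAME
# Say whether a command is on the PATH; returns 0 if found, 1 otherwise.
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found"
    else
        echo "$1: not found"
        return 1
    fi
}

# If nvidia-smi is present, list the GPUs that the driver can see.
if report_tool nvidia-smi; then
    nvidia-smi -L || echo "Driver not responding; a reboot may help"
fi
```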

Step 3: Install CUDA and Digits

CUDA is a platform that enables the GPU to execute tasks in parallel, increasing its efficiency. Digits is the interface for deep learning analysis. You use this interface to upload data, train models and make predictions. To install them, run the following commands:

CUDA_REPO_PKG=cuda-repo-ubuntu1404_7.5-18_amd64.deb && wget http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1404/x86_64/$CUDA_REPO_PKG && sudo dpkg -i $CUDA_REPO_PKG

ML_REPO_PKG=nvidia-machine-learning-repo_4.0-2_amd64.deb && wget http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1404/x86_64/$ML_REPO_PKG && sudo dpkg -i $ML_REPO_PKG

sudo apt-get update

sudo apt-get install digits

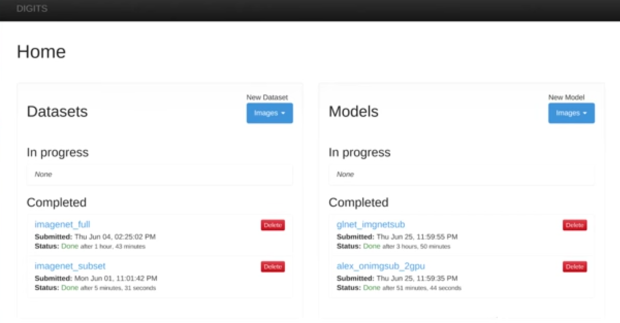

When the installation is successful, fire up your browser and go to http://localhost. You should see a webpage like the one below:

If you run into errors, refer to the troubleshooting section in the official installation guide.

Run Analysis

Nvidia Digits is a user-friendly platform that allows you to train prediction models using deep learning techniques. The video below provides a comprehensive demo on the entire process, from uploading data to making predictions:

Conclusion

That’s it. You have just built your own deep learning box. If you’re new to deep learning, you can also test the techniques in the cloud first, using Google’s Cloud Machine Learning platform. However, using your own GPU to run analysis might allow you more speed and flexibility to tweak parameters in the long run.

Copyright © 2015-Present Algobeans.com. All rights reserved. Be a cool bean.

Hi

Very helpful article. Could you help me with two queries please:

1) Do you have an updated list of hardware recommendations? I’m interested in building a box myself but noticed that this article was written exactly two years ago.

2) Is there any shop in Sim Lim (a more trustworthy one) that you would recommend to help assemble such a box?

Thanks in advance

The new card that I currently use and would recommend is GTX 1080Ti. There is a small form factor version of it.

https://www.pcgamer.com/zotac-shrinks-nvidias-gtx-1080-ti-for-small-form-factor-gaming/

Fuwell at Sim Lim is one such shop.

thanks @HHCHIN87, appreciate it. GTX 1080Ti is kinda expensive at >S$1000ish

Great article! Thanks. I was wondering if you knew about companies that could offer this service? I have been trying to find a company that can manage to do this (NVIDIA used to set up dev boxes) but can’t find any.

Thanks,

James

I’ve been attempting to find information about the relative importance of the various parts. Seems like the graphics card is the most important component, but what about the other parts, i.e. memory speed, hard drive capacity/type etc.?

So far, my machine is a Dell R900 PowerEdge with 4 quad-core processors and 64 GB memory (600 MHz). I’m hoping that running with higher-speed (SSD) drives will help make up for the lower-speed memory and lower-speed processors. It’s got many cores/threads, but overall it’s much slower than modern i5/i7 type computers.

Is there some kind of a benchmark that would help to identify potential bottlenecks in a system related to deep learning? Perhaps it’s better just to run the models and look for i/o issues.

anyways, thanks for the article. excellent info.

If you want to dive into deep learning, it will be necessary to work with a Titan X. You will see why a GPU card with 4 GB of RAM is not enough after some study. First of all, you will have a smaller batch size, so you will have to deal with other hyperparameters, like the learning rate, to achieve model convergence. Sometimes the model will never converge with a small batch size. So some deep model architectures, like ResNets or VGG, will never be within your scope.

Hi FSR,

At work, I run K40s and will likely move to the Pascal cards once things stabilize. Agree that this setup isn’t meant for industrial-grade deep learning; it’s more for exploring and playing with the different knobs of the models. We will have a follow-on article using the box in some of the experiments.

Great article! Thank you very much! Could you please name the motherboard that you chose? That seems to be the only info being missed. Thanks again! -Richard

Hi Richard,

The motherboard is the ASUS B150I Aura. It supports the LGA 1151 CPU socket. Thanks

Hello, it is a great essay! However, I am a poor student from China, and I want to study deep learning by myself. So, I want to buy a computer with:

cpu i7 6700k

gpu gtx970

ram 16g ddr4

The total cost may be 6000 RMB or 1000 dollars.

I want to implement DeepID2 or DeepID3 as practice, so I am curious: can I use this hardware to do so? Or do I need a GTX 980 or higher, which I can’t afford?

What is more, my teacher bought a ThinkStation with a Quadro K4200. Can I use it to implement DeepID?

Thank you again for your essay! And please forgive my poor English.

Hi Pang,

You can use your laptop’s GTX 970M to train DeepID, which has implementations in Caffe. But the laptop’s performance might be slower than the GTX 970 featured here, as the GTX 970M is a mobile version of the GTX 970. Comparison as follows. http://gpuboss.com/gpus/GeForce-GTX-980-vs-GeForce-GTX-970M

However, it is better to use a non-laptop solution, such as a full desktop computer, for training, as such GPU-intensive processing will cause the laptop to overheat.
